Forensic Science: Reliable and Valid?

Loaded on Oct. 16, 2019

by Jayson Hawkins

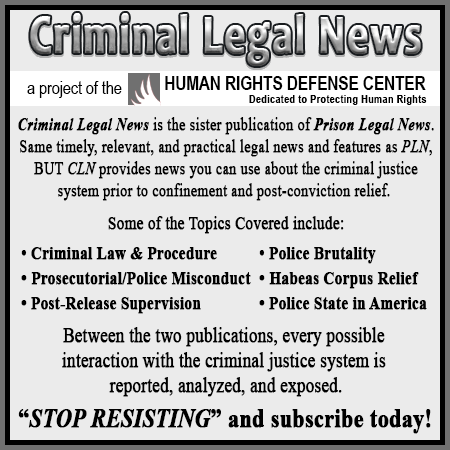

Published in Criminal Legal News, November 2019, page 42

Filed under: junk science.

The headlines have become too familiar: DNA shows wrong person imprisoned for decades-old crime.

More than 300 people have been exonerated by DNA evidence, and that number will only continue to rise as more cases are scrutinized. That raises the question of what has led to so ...

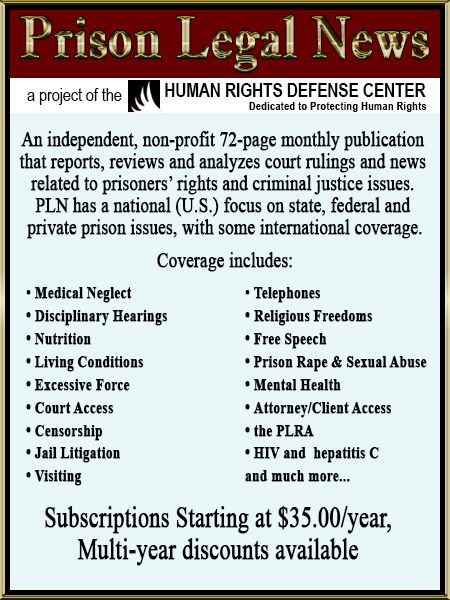

As a digital subscriber to Criminal Legal News, you can access full text and downloads for this and other premium content.

Already a subscriber? Login

More from this issue:

- Forensic Science: Reliable and Valid?, by Jayson Hawkins

- ‘Changes Are a Comin’, by Anthony Accurso

- Kansas Supreme Court: Expired License Plate Doesn’t Attenuate Evidence from Illegal Seizure, by Douglas Ankney

- Defendant’s Flight From Police’s Illegal Frisk Doesn’t Render Improperly Obtained Evidence Admissible in Maryland, by Anthony Accurso

- Costly Electronic Monitoring Programs Replacing Ineffective Jail Bond Systems, by Kevin Bliss

- Ninth Circuit Clarifies When Warrantless Searches of Cellphones at Border Are Reasonable, by Douglas Ankney

- Faulty Science Still Admissible Evidence in Many States, by Kevin Bliss

- Big Brother, Big Business, Big Law Enforcement, by Edward Lyon

- Third Circuit Announces New Rule for Amending § 2255 Motions on Appeal, by Dale Chappell

- Alabama OKs Chemical Castration for Some Sex Offenders, by Dale Chappell

- California Court of Appeal Announces Defendant Convicted of Felony Accessory Is Eligible for Resentencing Under Proposition 64, by Douglas Ankney

- Connecticut Supreme Court: When Expert’s Testimony Asserts Truth of DNA Profile Prepared by a Different Non-Testifying Expert, Confrontation Clause Is Violated, by Douglas Ankney

- Ex-Felons’ Rights Expanding to Include Jury Duty, by Edward Lyon

- Hundreds of Missouri Prisoners May Be Released Under New Sentencing Reform Law, by Dale Chappell

- U.S. District Court Grants Savings Clause Petition, Vacates Mandatory Life Sentence, by Dale Chappell

- Tenth Circuit: District Court Abused Discretion in Denying § 2255 Petition Without Hearing Where Record Didn’t Conclusively Show Defendant Not Entitled to Relief, by Douglas Ankney

- Ninth Circuit: Drug Quantity in PSR Adopted by Sentencing Court not Binding in § 3582(c)(2) Sentence Reduction Proceedings, by Michael Berk

- 10th Circuit: District Court Must Ensure When Defendant Waives Right to Counsel He Understands He’s Required to Adhere to Federal Procedural and Evidentiary Rules, by Douglas Ankney

- Is Data Mining an Invasion of Privacy?, by Kevin Bliss

- Ninth Circuit Rules IAC for Failure to Investigate Mitigating Evidence During Penalty Phase of Capital Trial, by Anthony Accurso

- Fifth Circuit Vacates § 924 Convictions Based on Davis, by Anthony Accurso

- Safe Interactions Between Police and Citizens, by Edward Lyon

- Fifth Circuit Reiterates Diligence Under AEDPA Requires Consideration of Actions Both Before and After Filing of Habeas Petition, by Dale Chappell

- 9th Circuit Says Inadvertently Placing Closed Folding Knife on Teller Counter Not Armed Bank Robbery, by Anthony Accurso

- Oregon Supreme Court Reaffirms ‘Independent Evidence Rule’ for Accomplice Testimony, by Mark Wilson

- Groundbreaking Connecticut Law Tracks Information on Jailhouse Snitches

- Fifth Circuit: First Step Act Doesn’t Permit Plenary Resentencing in Retroactive Application of the Fair Sentencing Act, by Douglas Ankney

- California Court of Appeal Explains Procedures to Determine Appropriate Relief When Conviction Is Vacated Based on People v. Chiu and Senate Bill 1437, by Douglas Ankney

- Connecticut Supreme Court Rules 5 Days Past Due on Rent While Incarcerated Does Not Deprive Defendant of Expectation of Privacy in Home, by Anthony Accurso

- New Hampshire Ends Death Penalty, by Jayson Hawkins

- Fourth Circuit Grants Habeas Relief to Pre-Trial State Prisoner on Double Jeopardy Grounds, by Dale Chappell

- Tennessee Supreme Court Abandons Doctrine of Abatement Ab Initio, by David Reutter

- New Jersey Supreme Court Announces New Test to Determine When State May Obtain Second DNA Sample After Unlawfully Obtained First Sample, by Douglas Ankney

- Fourth Circuit Reviews for Plain Error and Vacates Brandishing a Firearm Conviction Obtained Under 18 U.S.C. § 924(c)(3), by Douglas Ankney

- Fifth Circuit: Practices of Orleans Parish Judges in Collecting Fines and Fees Violates Due Process, by Douglas Ankney

- Tens of Thousands of Sentencing Decisions Are Hidden Within PACER, Hindering Access by Lawyers and Defendants, by Dale Chappell

- Pennsylvania Supreme Court: Probationer Must Violate Specific Condition of Probation or Commit New Crime to Be Found in Violation, by Douglas Ankney

- Seventh Circuit Reverses Convictions Under 18 U.S.C. § 924(c); Holds Underlying Offenses Do Not Qualify as ‘Crimes of Violence’, by Matthew Clarke

- Businesses Are Focusing More and More on Aiding Offenders Reentering Society, by Kevin Bliss

- Qualified Immunity: Explained, by Emily Clark, Amir H. Ali

- The Mass Incarceration Epidemic Viewed Through a Young Daughter’s Eyes, by Hayley Schulman

- Prosecutors Working to Clear Wrongful Convictions With Mixed Results, by Bill Barton

More from Jayson Hawkins:

- Bad Lawyering, Bankruptcy Torpedo Suit Over Delaware Prisoner’s Death, July 15, 2023

- Senators Rail at DOJ Failure to Report In-Custody Deaths, June 15, 2023

- Financial Pressure Finally Brings Police Reform, June 15, 2023

- “Slap On the Wrist” for California Bail Agents Who Hired Bounty Hunter Who Killed Their Client, May 1, 2023

- MTV Documentary Shines Light on Art Behind Bars, May 1, 2023

- Arizona Prisoner Condemned Again for Cellmate’s Murder, May 1, 2023

- U.S. Response to Haitian Crisis: Fund More Prisons, April 1, 2023

- Former State Prison Guards in Georgia Sentenced for Prisoner Assaults and Cover-Up, April 1, 2023

- After Years of Hard Work and Dedication, Adnan Syed Is Freed by Serendipity, March 15, 2023

- Accused War Criminals Training Cops: What Could Go Wrong?, March 15, 2023

More from these topics:

- Texas Court of Criminal Appeals Grants Habeas Relief in ‘Shaken Baby Syndrome’ Case, Feb. 1, 2025. junk science, Habeas Corpus, Forensic Sciences, Child Abuse/Abusers, Evidence - Admissibility.

- Colorado Bureau of Investigation Admits Over 1,000 Cases Affected by DNA Test Misconduct, Feb. 1, 2025. Judicial Misconduct, DNA Testing/Samples, junk science, Forensic Sciences.

- Seeking Justice for Two: The DNA Scandal That Shook a Community, Jan. 15, 2025. DNA Testing/Samples, junk science, Wrongful Conviction, DNA Evidence/Testing.

- Touch-Transfer DNA Remains Misunderstood and Still Poses High Risk of Wrongful Conviction, Dec. 15, 2024. DNA Testing/Samples, junk science, DNA Evidence/Testing.

- Scent of Death Evidence Admitted at Indiana Murder Trial, Dec. 15, 2024. junk science, Forensic Sciences, Murder/Felony Murder, Authenticity/Authentication.

- University of Maryland Carey Law Pioneers Forensic Defense Clinic, Nov. 1, 2024. junk science, Forensic Sciences.

- New Research Method Leads to Better Touch DNA Recovery and Development of Genetic Profiles, Oct. 1, 2024. DNA Testing/Samples, junk science.

- DNA Databases, Privacy Concerns, and Noble Cause Bias, Sept. 1, 2024. junk science, DNA Evidence, Bias/Discrimination.

- Years of Warnings Ignored as DNA Analyst at Colorado Crime Lab Allegedly Cut Corners, Her Misconduct Casts Doubt on Thousands of Cases, Sept. 1, 2024. junk science, DNA Evidence.

- Forensic Microbiology and Criminal Investigations, Sept. 1, 2024. junk science, DNA Evidence/Testing.